Apple’s plan to scan users’ phones and other devices for photos depicting child sexual abuse sparked outrage over privacy, prompting the company to postpone the program.

Apple, Facebook, and Google, among others, have long scanned users’ photos stored on the companies’ servers for this material. Scanning data on users’ own devices is a significant change.

No matter how well-intentioned the plan is, and regardless of whether Apple is willing or able to keep its commitments to preserve customers’ privacy, the company’s proposal demonstrates that people who buy iPhones are not masters of their own devices. Apple’s scanning scheme is also complex and difficult to audit. Thus, users are confronted with a harsh reality: If they use an iPhone, they must trust Apple.

Customers are compelled to trust Apple to use the system exactly as specified, to keep the system secure over time, and to put the interests of its users ahead of the interests of third parties, even the world’s most powerful governments.

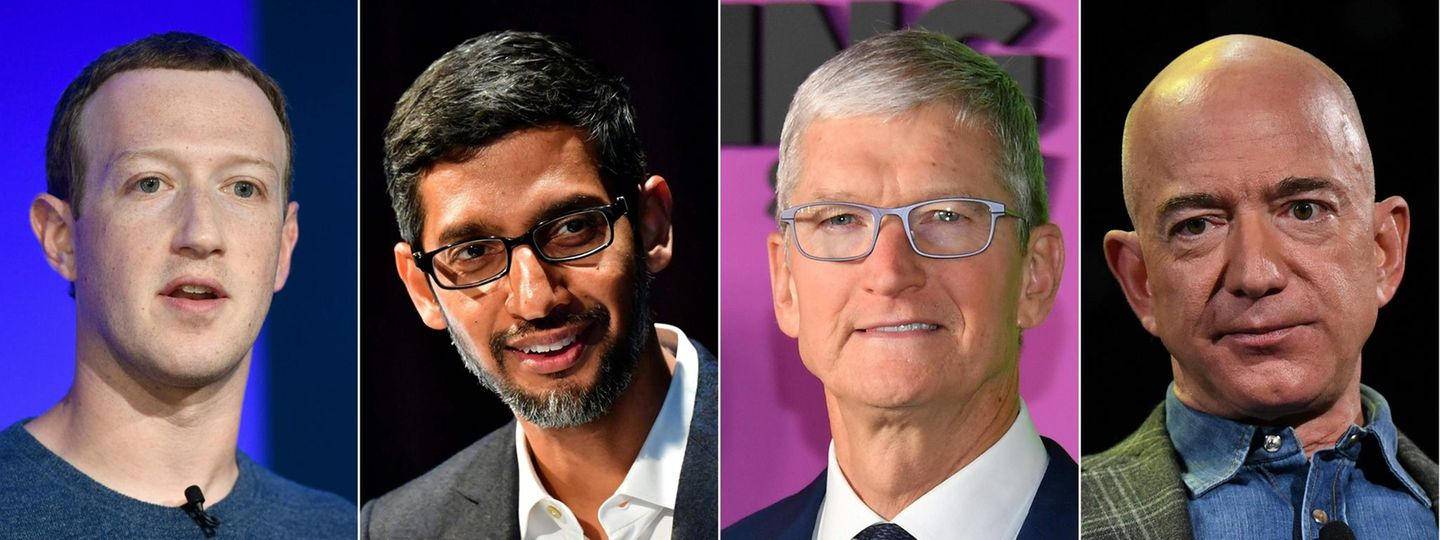

Although Apple’s approach is so far unique, the issue of trust is not exclusive to Apple. Other large technology companies have a similar level of control over their customers’ devices and access to their data.

What is trust?

According to social scientists, trust is “a party’s readiness to be vulnerable to the acts of another party.” The decision to trust is based on experience, indicators, and signals. However, prior behavior, promises, the manner in which someone behaves, evidence, and even contracts are merely data points. They cannot guarantee future behavior.

As a result, trust is a matter of probabilities. When you trust a person or an organization, you are effectively rolling the dice.

Trustworthiness is a hidden property. People gather information about another party’s likely future behavior, but they cannot be certain whether that party has the capacity to keep its word, is truly benevolent, and possesses the integrity – principles, processes, and consistency – to maintain its behavior over time, under pressure, or when the unexpected occurs.

Trust in Apple and Big Tech

Apple has said that its scanning system will be used exclusively to detect child sexual abuse material and that it includes numerous robust privacy safeguards. The system’s technical specifications show that Apple has taken steps to protect user privacy unless the system detects the targeted material. For instance, humans will review someone’s suspect material only when the system has detected the targeted material a certain number of times. However, Apple has provided scant evidence of how this approach will operate in practice.

After analyzing the “NeuralHash” algorithm on which Apple bases its scanning system, security researchers and civil rights organizations warn that, contrary to Apple’s assertions, the system is likely vulnerable to hackers.

Critics also fear that the system will be used to scan for other types of material, such as signs of political dissent. Apple, along with other major technology companies, has bowed to the demands of authoritarian governments, most notably China, to allow government surveillance of technology users. In practice, the Chinese government has access to all user data. What will be different this time?

It should also be noted that Apple is not operating this system on its own. Apple intends to use data from the nonprofit National Center for Missing and Exploited Children in the United States and to report suspect material to the organization. Trusting Apple is therefore not enough: users must also trust the company’s partners to act benevolently and with integrity.

Big Tech’s less-than-encouraging track record

This case comes against the backdrop of repeated Big Tech privacy intrusions and moves to further curtail consumer freedoms and control. Although the companies have positioned themselves as responsible parties, many privacy experts argue that there is too little transparency and too little technical or historical evidence to support these claims.

Unintended consequences are another source of concern. Apple may genuinely wish to protect children while also protecting users’ privacy. Nonetheless, the company has now announced – and staked its credibility on – a technology that is well-suited to mass surveillance. Governments may pass laws to extend scanning to other material deemed illegal.

Would Apple, and possibly other technology companies, refuse to follow such laws and pull out of those markets, or would they comply with potentially draconian local legislation? There is no way to predict the future, yet Apple and other technology companies have already acquiesced to oppressive regimes.

For example, technology companies that choose to operate in China are compelled to submit to censorship.

Weighing whether to trust Apple or other tech companies

There is no one-size-fits-all answer to the question of whether Apple, Google, or their competitors can be trusted. Risks vary according to who you are and where you are in the world. An activist in India faces different threats and risks than an Italian defense attorney does. Trust is a matter of probabilities, and risks are not only probabilistic but also situational.

It’s a matter of how much risk of failure or deception you’re willing to accept, what threats and risks are relevant, and what protections or mitigations are available. Relevant factors include your government’s position, the existence of strong local privacy laws, the strength of the rule of law, and your own technical ability. Nonetheless, there is one constant: Technology companies typically have extensive control over your devices and data.

As is the case with all large organizations, technology firms are complex: Employees and management are constantly changing, as are regulations, policies, and power dynamics.

A company that is trustworthy today may not be trustworthy tomorrow.

Big Tech companies have behaved in ways that should make customers question their trustworthiness, particularly where privacy is concerned.

However, they have also defended user privacy in other instances, such as the San Bernardino mass shooting case and the encryption debates that followed.